Synopsis

Virtual Reality (VR) has the potential to improve our understanding of research areas such as mental health, performance and user experience. In Human Computer Interaction (HCI), and in particular the study of auditory-visual associations, VR can help us gain new insights into how we form associations between our senses. I propose to create an auditory-visual association test in VR, based on the standard tests used in research today. Given the more immersive environment of VR, will results from the proposed VR application differ from those obtained with today’s standard testing methods? The knowledge gained from the proposed study and the accompanying VR application can help shape future interface design in terms of adaptability and personalisation.

Introduction

Research into the relationship between the auditory and visual senses has produced many interesting insights and has shaped the way interactions between humans and machines are designed. Newer technologies that offer a higher level of immersion, such as Virtual Reality (VR), allow us to develop these interactions further. Research using VR has already produced new knowledge in several areas that might not have been found using more traditional techniques.

This paper proposes a new VR application that assesses how a person forms associations between the auditory and visual senses, and that will be tested against the traditional testing methods available today. The data from the two tests will be compared to see whether the strength of the auditory-visual associations differs, indicating whether immersive environments can affect our understanding of the connections between our senses.

The next section reviews research on auditory-visual associations and synaesthesia, standard association tests and virtual reality, before introducing the proposed study.

Figure caption (fragment): …environment on the left is visible and can interact with the different coloured objects. When they find an agreeable sound-colour association, they can put the coloured object on the plinth to confirm their choice.

Background

Auditory-Visual Associations and Synaesthesia

Past research shows significant auditory-visual associations between pitch and brightness [3, 5, 16]; musical key and brightness [10]; timbre and saturation; loudness and brightness; and pitch and size, among others [14]. Palmer et al. [10] found that emotion has an impact on how we associate music with colour. Their account, the emotion mediation hypothesis, was tested on music-colour associations, and the results were highly consistent with it.

Research into synaesthesia, a condition that affects roughly 2-4% of the population, has provided valuable knowledge about normal cross-modal associations [3]. Synaesthesia occurs when stimulation of one sense (the inducer) triggers an experience in a second, unstimulated sense (the concurrent) [3]. Synaesthesia has been found to be seven times more common in creative people than in the general population [12].

Standard Association Tests

Several tests have been created to help understand how associations between our senses are made, specifically between the auditory and visual senses, including tests that identify synaesthesia and the type of synaesthesia a person has. Widely used tests in research include the Implicit Association Test (IAT) [7], the Revised Test of Genuineness (TOG-R) [1], the Synaesthesia Battery [2, 4] and the NeCoSyn test [15].

While the TOG-R, Synaesthesia Battery and NeCoSyn are mainly used to test for synaesthesia, the IAT has been used both in synaesthesia research and in studies of non-synaesthetes. O’Toole et al. [9] found that the Synaesthesia Battery produced interesting insights into participants who took the test but were not classified as synaesthetes: their scores were spread across a spectrum between high and low auditory-visual association strength. Open-ended questions at the end of the test revealed differences in participants’ thought processes about their associations depending on whether they obtained a higher score (closer to synaesthete level) or a lower one [9]. The synaesthesia tests mentioned above are all very similar, with the Synaesthesia Battery and NeCoSyn being alternative versions of the test of genuineness [2, 15].
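Consistency tests of the kind described above typically present each stimulus several times and score how far apart a participant’s colour choices are. The sketch below shows one such score, assuming choices are recorded as RGB values: for each stimulus it sums the Euclidean distances between every pair of repeated choices (with RGB normalised to 0 to 1) and averages across stimuli, so lower values indicate more consistent associations. It illustrates the general approach used by batteries such as [2, 4] rather than their exact procedure; the colour space and any cut-off separating synaesthetes from non-synaesthetes should be taken from those papers, and the function name is hypothetical.

```python
import itertools
import math


def consistency_score(choices_by_stimulus):
    """Hypothetical helper: mean summed pairwise RGB distance per stimulus.

    choices_by_stimulus maps a stimulus label (e.g. a note name) to the list
    of RGB colours (0-255 per channel) chosen on its repeated presentations.
    Lower scores mean more consistent sound-colour associations.
    """
    per_stimulus = []
    for stimulus, choices in choices_by_stimulus.items():
        if len(choices) < 2:
            raise ValueError(f"{stimulus!r} needs at least two responses")
        # Normalise each channel to 0-1 so every distance is at most sqrt(3).
        normalised = [tuple(channel / 255 for channel in rgb) for rgb in choices]
        # Sum the Euclidean distances between every pair of responses.
        per_stimulus.append(
            sum(math.dist(a, b) for a, b in itertools.combinations(normalised, 2))
        )
    if not per_stimulus:
        raise ValueError("no stimuli supplied")
    return sum(per_stimulus) / len(per_stimulus)


# Usage: identical reds for C4 (distance 0), black/white/black for D4.
responses = {
    "C4": [(255, 0, 0), (255, 0, 0), (255, 0, 0)],
    "D4": [(0, 0, 0), (255, 255, 255), (0, 0, 0)],
}
print(round(consistency_score(responses), 3))  # 1.732
```

A participant who always picks the same colour for a note contributes zero for that note, so the score grows only with inconsistency.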

Virtual Reality

Virtual reality technologies are becoming increasingly affordable and available to the general population, which has allowed more research to be conducted with them [8, 17]. Because VR is more interactive and immersive, it has the potential to serve as a scientific research tool in areas where immersion could change the outcome of a study. VR studies could replace standard research tools that do not provide the level of immersion needed, helping to build knowledge in many areas of research.

Research in VR environments has already produced interesting results in fields closely related to auditory-visual associations. Poguntke et al. [11] compared stress perception in VR and on a regular desktop. Using a Stroop word-colour test, they found that stressful tasks were perceived as less stressful in VR than on a desktop if no additional stress factors, such as head movement, were involved. The authors followed up this work by creating the Stroop Room, an open-source, virtual reality-enhanced Stroop test, and found that some metrics improved by 30-40% compared to the traditional Stroop test [6].

Wu et al. [18] sought to identify the optimal arousal level for an individual’s performance. A word-colour Stroop test was conducted in VR, with different levels of stress introduced by a background scenario of driving through a wartime setting. They identified an optimal arousal level and concluded that moderate levels of arousal lead to improved performance.

Emotion is also an important area to understand with regard to auditory-visual associations since, as noted earlier, these associations are mediated by emotion [10]. Research has also highlighted the benefits of VR as an affective medium. Riva et al. [13] studied the link between presence and emotions using virtual environments designed to produce different emotional states, such as relaxation and anxiety. Using three versions of a virtual park (Neutral, Relaxed and Anxious), the study showed that VR can be an effective mood-induction medium and that the affective parks (Relaxed and Anxious) elicited specific emotional states.

The studies presented above, among many others, show the potential of VR for research that would benefit from a more immersive environment. VR has been shown to be effective in gaining new insights into areas such as affect, performance, stress and creative design. I will briefly outline the proposed study next, before describing the technical requirements and the testing application.

Proposed Study

The proposed study will compare the strength of users’ auditory-visual associations measured with a traditional association test and with a similar test created in VR. From this study we hope to understand the impact that the immersion and interactivity of VR can have on how we form associations between our auditory and visual senses. Participants will be asked to take one of the standard auditory-visual association tests currently used in research and then to take the test using the VR application at a later date. The results will be compared to see whether any differences emerge between the two tests.
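The proposal does not yet fix a statistical analysis. As one possible sketch, assuming each participant receives a single association-strength score on both tests (for example, a measure of how consistently they match colours to repeated notes), a paired t statistic compares the two conditions within participants. The function name is hypothetical; in practice a library routine such as SciPy’s `scipy.stats.ttest_rel` would also give the p-value, and a non-parametric alternative such as the Wilcoxon signed-rank test may suit small or skewed samples better.

```python
import math
import statistics


def paired_t(standard_scores, vr_scores):
    """Hypothetical helper: paired t statistic for standard vs VR scores.

    Both lists hold one score per participant, in the same participant order.
    Returns (mean difference VR - standard, t statistic, degrees of freedom).
    """
    if len(standard_scores) != len(vr_scores):
        raise ValueError("each participant needs a score from both tests")
    diffs = [vr - std for std, vr in zip(standard_scores, vr_scores)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two participants")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return mean_diff, t, n - 1


# Usage with illustrative numbers for four participants (not study data).
mean_diff, t, df = paired_t([2.0, 1.5, 3.0, 2.5], [1.5, 1.0, 2.0, 2.5])
print(mean_diff, round(t, 3), df)  # -0.5 -2.449 3
```

The sign of the mean difference must be read against the scoring direction: if lower scores mean more consistent associations, a negative difference would point to stronger associations in VR.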

Technical Equipment

The application proposed in the previous section will capture the auditory-visual test data for each participant session. The VR headset must be able to capture tracking data from the hand controllers and the head-mounted display (HMD), and to output audio. The basic application will not need any extra technologies, such as gestural gloves or electromyography (EMG) sensors, but as the application is improved, such devices could help gain further insights into auditory-visual associations.
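The per-trial data the application captures could be logged in a simple flat format for later analysis. The sketch below is written in Python for consistency with the other examples (the Unity application itself would be scripted in C#) and assumes one row per trial; the field names are hypothetical, and the HMD and controller tracking streams could be written to a separate file in the same way.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class TrialRecord:
    """One confirmed sound-colour choice (all field names are hypothetical)."""
    participant_id: str
    trial_index: int
    note: str
    repetition: int
    red: int
    green: int
    blue: int
    response_time_s: float  # assumed: note onset until the object is placed


def write_trials(records, stream):
    """Write trial records as CSV, with a header row, to a text stream."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(TrialRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Usage: write to an in-memory buffer (a file opened with newline="" also works).
buffer = io.StringIO()
write_trials([TrialRecord("P01", 0, "C4", 1, 255, 0, 0, 3.2)], buffer)
print(buffer.getvalue())
```

Keeping one flat row per trial makes it straightforward to compute per-note consistency later, since the three repetitions of each note can be grouped by the `note` column.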

Hardware

There are several VR headsets on the market today, and they are becoming more affordable. While headsets such as the HTC Vive Pro and Valve Index are good options, Meta’s Oculus Quest 2 is very affordable and is well supported by many of the software packages used to create VR environments. The Oculus Quest 2 does not need to be tethered to a computer and can capture and store data on the device until the testing session has concluded.

Software

The VR application will be created in Unity, a widely used game engine with a large community of users and contributors and a considerable range of assets available for free or for purchase via the Unity Asset Store. Unity also offers good learning pathways, tutorials and documentation.


Proposed VR Auditory-Visual Test

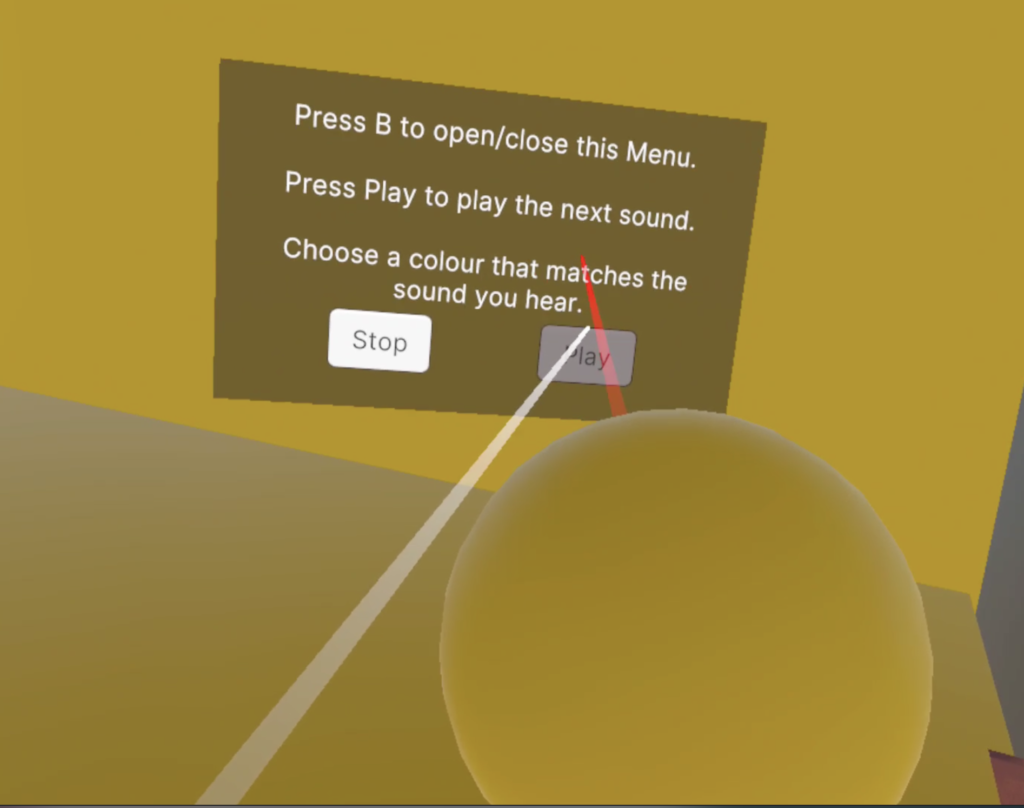

The proposed VR application will take aspects of the tests mentioned in the background section and recreate them in a more immersive space. Some of these tests use standard colour charts with a fixed number of choices [1], while others use more complex systems, such as the Natural Color System (NCS), giving the user more choice and detail in selecting a colour [3]. For the VR application, a fixed number of colours is the better option, with each colour attached to an interactable object to help create a more immersive environment, as shown in Figure 1.

The basic test will look for associations between single musical notes and colours, using 13 notes (12 notes from a scale and 1 higher note), each repeated three times, similar to [2, 4]. Further tests could examine instrument timbre or musical chords, which are part of the Synaesthesia Battery [2, 4].
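The stimulus schedule can be generated programmatically. Because the text does not name the scale, the sketch assumes that the 13 notes are the chromatic scale from C4 plus C5 an octave above, tuned in twelve-tone equal temperament with A4 = 440 Hz, and that the 39 trials are presented in a shuffled order; the function names are hypothetical. The frequencies are mainly useful for checking or labelling the recorded piano samples.

```python
import random

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def note_frequency(midi_number, a4_hz=440.0):
    """Twelve-tone equal temperament frequency, with A4 as MIDI note 69."""
    return a4_hz * 2 ** ((midi_number - 69) / 12)


def build_trials(start_midi=60, repetitions=3, seed=None):
    """Hypothetical helper: shuffled (note name, frequency, repetition) trials.

    Assumes the 13 stimuli are the chromatic scale starting at start_midi plus
    the note one octave above it (C4 to C5 by default, with MIDI 60 = C4).
    """
    notes = []
    for midi in range(start_midi, start_midi + 13):
        name = NOTE_NAMES[midi % 12] + str(midi // 12 - 1)
        notes.append((name, note_frequency(midi)))
    trials = [
        (name, freq, rep)
        for rep in range(1, repetitions + 1)
        for name, freq in notes
    ]
    # A seeded generator makes a participant's order reproducible.
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trials(seed=42)
print(len(trials))                   # 39
print(round(note_frequency(60), 2))  # 261.63
```

Storing the seed with each session would allow the exact presentation order to be reconstructed during analysis.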

The auditory stimuli will consist of high-quality recordings of the 13 notes played on a piano or keyboard, each looping until the user confirms their choice within the VR environment. With each colour applied to an interactable object, participants confirm their choice by placing the object on a plinth (see Figure 2). When a coloured object is picked up, the whole environment can change to match the participant’s choice. This can be displayed in different ways, either as a solid colour that fills the room (see Figure 2) or as a fog-like animation that moves across the user’s visual field.
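The interaction described above amounts to a small per-trial state machine: the note loops while no choice is confirmed, picking up an object previews its colour across the environment, and placing it on the plinth confirms the choice. The sketch below models that flow in plain Python. The class and method names are hypothetical; in Unity they would be driven by the interaction system’s grab and place events (an assumption about the implementation), and the rule that putting an object down elsewhere cancels the preview is an assumption the text does not specify.

```python
from enum import Enum, auto


class TrialState(Enum):
    PLAYING = auto()    # note loops, no object held
    HOLDING = auto()    # object held, environment shows its colour
    CONFIRMED = auto()  # object placed on the plinth, trial finished


class Trial:
    """Hypothetical model of one note-colour trial in the VR scene."""

    def __init__(self, note):
        self.note = note
        self.state = TrialState.PLAYING
        self.held_colour = None
        self.environment_colour = None

    @property
    def note_should_loop(self):
        # The recording keeps looping until the choice is confirmed.
        return self.state is not TrialState.CONFIRMED

    def pick_up(self, colour):
        if self.state is TrialState.CONFIRMED:
            raise RuntimeError("trial already confirmed")
        self.held_colour = colour
        self.environment_colour = colour  # preview the colour across the room
        self.state = TrialState.HOLDING

    def drop(self):
        # Assumption: putting the object down off the plinth cancels the preview.
        if self.state is TrialState.HOLDING:
            self.held_colour = None
            self.environment_colour = None
            self.state = TrialState.PLAYING

    def place_on_plinth(self):
        if self.state is not TrialState.HOLDING:
            raise RuntimeError("no coloured object is being held")
        self.state = TrialState.CONFIRMED
        return self.held_colour  # the confirmed choice to log


trial = Trial("C4")
trial.pick_up((255, 0, 0))   # room turns red while the object is held
trial.drop()                 # preview cancelled, note still looping
trial.pick_up((0, 0, 255))
print(trial.place_on_plinth(), trial.note_should_loop)  # (0, 0, 255) False
```

Keeping the trial logic separate from rendering means the solid-colour and fog display options can share the same state machine and differ only in how `environment_colour` is drawn.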

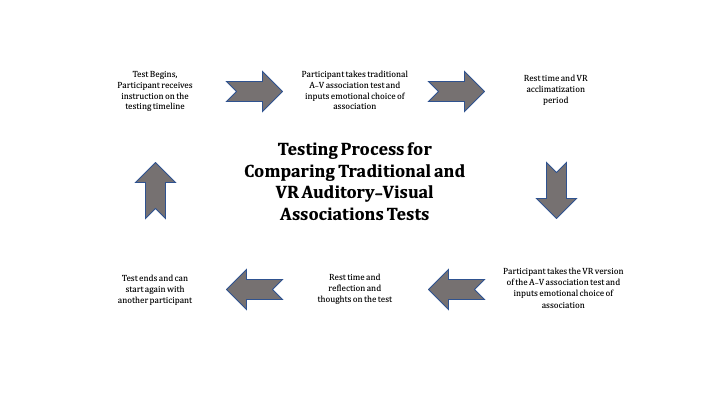

A user study using the System Usability Scale (SUS) will be conducted to test the application and to see which of the set-up options provides a more immersive experience. Based on the SUS feedback, the final application will be developed for use in the research study described above. Participants will be asked to attend a session to take a standard auditory-visual association test and then to take the VR version of the test at a later stage (see the testing process in Figure 3). The results will then be compared to investigate any differences between the two tests and to see whether the more immersive environment produces a stronger association between the two senses.
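The System Usability Scale has a standard scoring rule: each of the ten items is rated from 1 to 5, positively worded odd-numbered items contribute (rating - 1), negatively worded even-numbered items contribute (5 - rating), and the sum is multiplied by 2.5 to give a score from 0 to 100. A minimal sketch follows; the function names are hypothetical, and comparing set-up options (for example, the solid-colour and fog displays) is assumed here to use the mean score per option.

```python
def sus_score(responses):
    """Score one completed SUS questionnaire on a 0-100 scale.

    responses: ten ratings, 1 (strongly disagree) to 5 (strongly agree),
    in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("ratings must be integers from 1 to 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += rating - 1 if item_number % 2 == 1 else 5 - rating
    return total * 2.5


def mean_sus(questionnaires):
    """Hypothetical helper: mean SUS score across participants for one option."""
    scores = [sus_score(q) for q in questionnaires]
    return sum(scores) / len(scores)


# Usage: a neutral questionnaire scores 50; the best possible scores 100.
print(sus_score([3] * 10))    # 50.0
print(sus_score([5, 1] * 5))  # 100.0
```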

Conclusion

Moving auditory-visual association tests into a VR space could have a significant impact on future research in the area. Using VR applications as learning tools to strengthen associations between our senses could also influence how we create interfaces and interactions in the digital space. Making interfaces and interactions with technology more adaptable and personalised will help in developing systems that can be used universally by a diverse population. As well as the application’s benefits outside VR, it can also help us understand how to implement cross-modal associations in VR experiences and games to create more immersion and a stronger connection to the narrative of the experience.

References

[1] J. Asher, M. Aitken, N. Farooqi, S. Kurmani, and S. Baron-Cohen. 2006. Diagnosing and Phenotyping Visual Synaesthesia: A Preliminary Evaluation of the Revised Test of Genuineness (TOG-R). Cortex 42, 2 (2006), 137–146. https://doi.org/10.1016/S0010-9452(08)70337-X

[2] D.A. Carmichael, M.P. Down, R.C. Shillcock, D.M. Eagleman, and J. Simner. 2015. Validating a Standardised Test Battery for Synesthesia: Does the Synesthesia Battery Reliably Detect Synesthesia? Consciousness and Cognition 33 (May 2015), 375–385. https://doi.org/10.1016/j.concog.2015.02.001

[3] Caroline Curwen. 2018. Music-Colour Synaesthesia: Concept, Context and Qualia. Consciousness and Cognition 61 (May 2018), 94–106. https://doi.org/10.1016/j.concog.2018.04.005

[4] David M. Eagleman, Arielle D. Kagan, Stephanie S. Nelson, Deepak Sagaram, and Anand K. Sarma. 2007. A Standardized Test Battery for the Study of Synesthesia. Journal of Neuroscience Methods 159, 1 (Jan. 2007), 139–145. https://doi.org/10.1016/j.jneumeth.2006.07.012

[5] Aviva I. Goller, Leun J. Otten, and Jamie Ward. 2009. Seeing Sounds and Hearing Colors: An Event-related Potential Study of Auditory–Visual Synesthesia. Journal of Cognitive Neuroscience 21, 10 (Oct. 2009), 1869–1881. https://doi.org/10.1162/jocn.2009.21134

[6] Stefan Gradl, Markus Wirth, Nico Mächtlinger, Romina Poguntke, Andrea Wonner, Nicolas Rohleder, and Bjoern M. Eskofier. 2019. The Stroop Room: A Virtual Reality-Enhanced Stroop Test. In 25th ACM Symposium on Virtual Reality Software and Technology. ACM, Parramatta NSW Australia, 1–12. https://doi.org/10.1145/3359996.3364247

[7] Anthony G. Greenwald and Mahzarin R. Banaji. 1995. Implicit Social Cognition: Attitudes, Self-Esteem, and Stereotypes. Psychological Review 102, 1 (1995), 4–27. https://doi.org/10.1037/0033-295X.102.1.4

[8] S. Malpica, A. Serrano, M. Allue, M. G. Bedia, and B. Masia. 2020. Crossmodal Perception in Virtual Reality. Multimedia Tools and Applications 79, 5–6 (Feb. 2020), 3311–3331. https://doi.org/10.1007/s11042-019-7331-z

[9] Patrick O’Toole, Donald Glowinski, and Maurizio Mancini. 2019. Understanding Chromaesthesia by Strengthening Auditory-Visual-Emotional Associations. In 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII). IEEE, Cambridge, United Kingdom, 1–7. https://doi.org/10.1109/ACII.2019.8925465

[10] S. E. Palmer, K. B. Schloss, Z. Xu, and L. R. Prado-Leon. 2013. Music-Color Associations Are Mediated by Emotion. Proceedings of the National Academy of Sciences 110, 22 (May 2013), 8836–8841. https://doi.org/10.1073/pnas.1212562110

[11] Romina Poguntke, Markus Wirth, and Stefan Gradl. 2019. Same Same but Different: Exploring the Effects of the Stroop Color Word Test in Virtual Reality. In Human-Computer Interaction – INTERACT 2019, David Lamas, Fernando Loizides, Lennart Nacke, Helen Petrie, Marco Winckler, and Panayiotis Zaphiris (Eds.). Vol. 11747. Springer International Publishing, Cham, 699–708. https://doi.org/10.1007/978-3-030-29384-0_42

[12] Vilayanur S. Ramachandran and Edward M. Hubbard. 2003. Hearing Colors, Tasting Shapes. Scientific American 288, 5 (2003), 52–59.

[13] Giuseppe Riva, Fabrizia Mantovani, Claret Samantha Capideville, Alessandra Preziosa, Francesca Morganti, Daniela Villani, Andrea Gaggioli, Cristina Botella, and Mariano Alcañiz. 2007. Affective Interactions Using Virtual Reality: The Link between Presence and Emotions. CyberPsychology & Behavior 10, 1 (Feb. 2007), 45–56. https://doi.org/10.1089/cpb.2006.9993

[14] Charles Spence. 2011. Cross-modal Correspondences: A Tutorial Review. Attention, Perception, & Psychophysics 73, 4 (May 2011), 971–995. https://doi.org/10.3758/s13414-010-0073-7

[15] Crétien van Campen and Clara Froger. 2003. Personal Profiles of Color Synesthesia: Developing a Testing Method for Artists and Scientists. Leonardo 36, 4 (Aug. 2003), 291–294. https://doi.org/10.1162/002409403322258709

[16] J. Ward, B. Huckstep, and E. Tsakanikos. 2006. Sound-Colour Synaesthesia: To What Extent Does It Use Cross-Modal Mechanisms Common to Us All? Cortex 42, 2 (2006), 264–280. https://doi.org/10.1016/S0010-9452(08)70352-6

[17] Victoria Wright and Genovefa Kefalidou. 2021. Can You Hear the Colour? Towards a Synaesthetic and Multimodal Design Approach in Virtual Worlds. In 33rd British Human Computer Interaction Conference. British HCI Conference, London.

[18] Dongrui Wu, Christopher G. Courtney, Brent J. Lance, Shrikanth S. Narayanan, Michael E. Dawson, Kelvin S. Oie, and Thomas D. Parsons. 2010. Optimal Arousal Identification and Classification for Affective Computing Using Physiological Signals: Virtual Reality Stroop Task. IEEE Transactions on Affective Computing 1, 2 (July 2010), 109–118. https://doi.org/10.1109/T-AFFC.2010.126
