Introduction

Virtual Reality (VR) is a rapidly evolving technology that has made significant strides in transforming how we interact with digital environments across a variety of industries, including gaming, education, healthcare, military training, and architecture. The continued growth and adoption of VR technologies emphasize the importance of creating immersive and interactive experiences, driving advancements in natural and seamless interaction techniques within virtual environments.

In the gaming industry, VR aims to provide players with experiences that are usually impossible to produce in the real world, through enhanced player engagement and immersion [1]. Education has seen the integration of immersive learning experiences that facilitate better comprehension and retention of complex subjects by allowing multiple perspectives, situated learning, and transfer [2]. Healthcare has been positively impacted by the introduction of VR for training medical professionals, treating phobias, and enabling physical therapy, expanding the range of treatment possibilities [3]. Military training has embraced VR for combat training and simulation, focusing on creating realistic and efficient training scenarios, which leads to more cost-effective solutions without loss or damage to humans or equipment [4]. In architecture, virtual walkthroughs have become invaluable tools for clients and stakeholders to visualise and evaluate design proposals more effectively, and VR technology can be important in areas such as stakeholder engagement, design support, design review, construction support, operations and management support, and training [5].

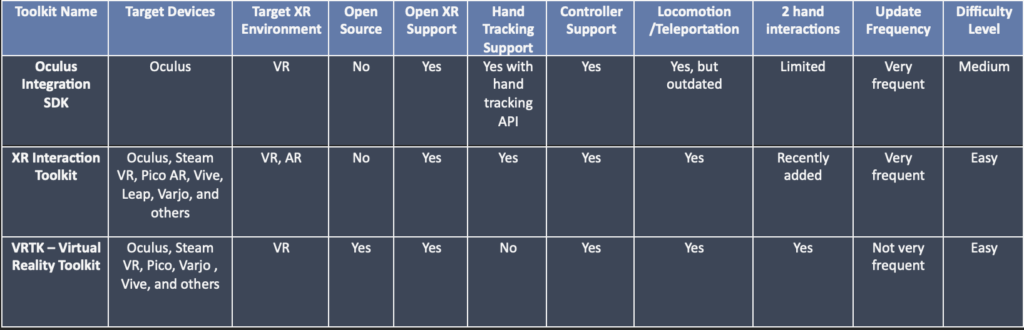

The enhancement of immersion in VR is intrinsically linked to the quality of interaction within virtual environments, covering aspects such as object manipulation, navigation, communication, and feedback mechanisms. To address this critical aspect of VR experiences, this piece aims to analyse and compare available toolkits and frameworks for developing immersive VR applications, with a particular focus on their interaction capabilities. The toolkits under investigation include the Unity XR Interaction Toolkit (XRI), Oculus Integration Toolkit, Virtual Reality Toolkit (VRTK), SteamVR Plugin, and Microsoft’s Mixed Reality Toolkit (MRTK).

By examining and contrasting these toolkits, this piece seeks to provide valuable insights and guidance for developers, researchers, and industry professionals aiming to create engaging and interactive VR applications across a diverse range of domains, ultimately contributing to the advancement and refinement of VR experiences.

Background and Related Work

The history of Virtual Reality (VR) interactions dates back to the 1960s, when Ivan Sutherland’s work on the first head-mounted display (HMD) laid the foundation for immersive experiences [6]. Since then, VR has experienced a remarkable evolution, with interaction techniques continuously improving to offer more realistic and natural experiences to users.

Initial VR systems utilised simplistic interaction techniques, such as keyboard inputs and basic hand gestures. As technology advanced, more sophisticated input devices emerged, such as the DataGlove, which allowed users to perform complex hand movements within a virtual environment [7]. Later, the introduction of motion controllers and haptic feedback devices, such as the Razer Hydra [8] and the Novint Falcon [9], further enhanced the sense of immersion and interaction in VR experiences.

Research on VR interactions has been a focal point in the field, with numerous studies examining the usability and effectiveness of various interaction techniques [1][10][11]. These studies have informed the development of newer interaction methods, such as hand tracking, which leverages computer vision and machine learning to enable users to manipulate objects in the virtual world using their hands directly.

With the rapid evolution of VR hardware and software, there is an increasing need for toolkits and frameworks that can streamline the development of immersive applications while providing robust and flexible interaction capabilities. Previous tools and methods, such as early VR development frameworks, focused primarily on basic interaction techniques and were limited in their support for more advanced functionalities. As a result, developers often had to create custom solutions for complex interactions, which could be time-consuming and resource-intensive [12]. These custom solutions often involved the development of novel interaction techniques or the adaptation of existing ones to fit specific application requirements, as seen in various studies and projects exploring new ways to interact with virtual environments [13].

The emergence of new toolkits and frameworks, such as the Unity XR Interaction Toolkit (XRI), Oculus Integration Toolkit, Virtual Reality Toolkit (VRTK), SteamVR Plugin, and Microsoft’s Mixed Reality Toolkit (MRTK), aims to address these challenges by providing developers with a comprehensive set of pre-built interaction features that can be easily integrated into VR applications. These toolkits not only facilitate rapid development but also help ensure a consistent and high-quality user experience across different platforms and devices.

In summary, the field of VR interactions has come a long way since its inception, with significant advancements in both hardware and software shaping the experiences of today. The development of robust toolkits and frameworks has been crucial in supporting this progress, enabling developers to create immersive and interactive applications that cater to a wide range of industries and use cases.

Overview of VR Interaction Toolkits

In recent years, a variety of toolkits and frameworks have emerged to facilitate the development of immersive and interactive VR applications. These toolkits provide developers with a comprehensive set of pre-built interaction features, enabling them to create engaging experiences without the need for extensive custom solutions. This section provides an overview of some of the most widely used VR interaction toolkits, highlighting their unique features, strengths, and limitations. By examining these toolkits, we aim to offer insights into the state-of-the-art VR interaction methods, allowing developers and researchers to make informed decisions when selecting the most suitable toolkit for their specific needs and use cases.

Unity XR Interaction Toolkit (XRI)

The Unity XR Interaction Toolkit is a comprehensive package developed by Unity Technologies that simplifies the creation of VR and AR applications within the Unity game engine. This cross-platform toolkit provides an extensive set of interaction components, locomotion systems, and user interface elements, making it a versatile choice for developers seeking to create immersive experiences with minimal effort. Version 2.3 of the toolkit added three key features: eye gaze and hand tracking capabilities for more natural interactions, audiovisual affordances to bring interactions to life, and an improved device simulator for testing in the Editor [14][25][26]. The most recent version, 2.4, adds a new Climb Locomotion Provider with matching interactables, and an Interaction Focus State that can help highlight objects to perform operations on [28].

Oculus Integration Toolkit

The Oculus Integration Toolkit is a package developed by Meta-owned (formerly Facebook-owned) Oculus VR specifically for the Oculus Rift and Quest headsets. Designed to work seamlessly within the Unity game engine, this toolkit focuses on leveraging the unique features and capabilities of Oculus hardware, such as hand tracking and spatial audio, to deliver high-quality VR experiences for Oculus users. The most recent version of the Oculus Integration package at the time of writing is v56.0, and it includes the OVRPlugin as well as SDKs for Audio, Platform, Lip-sync, Voice, and Interaction. Meta has introduced new features to the VoiceSDK, OVRPlugin, and InteractionSDK, while also making improvements and fixing issues across all the SDKs in the package [15]. Meta updates this package frequently and continues to improve how developers can create meaningful interactions with its products.

Virtual Reality Toolkit (VRTK)

VRTK is an open-source VR development framework, released under the MIT Licence, that aims to simplify the creation of VR applications across multiple platforms. It offers a wide range of pre-built interaction components, such as grab mechanics, teleportation, and UI elements, which can be easily integrated into VR projects. VRTK is compatible with several VR devices, making it a flexible choice for developers seeking a platform-agnostic solution for VR development. VRTK recently released version 4 of the toolkit, with the introduction of many common features. Support for older versions of VRTK seems to be limited and can involve manually amending code to get it working, but the project appears to have good visibility on different platforms to support developers using the toolkit [16].

Other Notable Toolkits

In addition to the three toolkits mentioned above, the SteamVR Plugin and Microsoft’s Mixed Reality Toolkit (MRTK) are also popular choices for VR development. The SteamVR Plugin, developed by Valve Corporation, is designed to work with the Unity game engine and supports a range of interaction systems for the SteamVR platform. It focuses on three main things for developers: loading 3D models for VR controllers, handling input from those controllers, and estimating what your hands look like while using the controllers [17]. Microsoft’s MRTK is a cross-platform development toolkit that supports VR and AR applications for Windows Mixed Reality headsets and the HoloLens device. It won “Best Developer Tool” in 2021, and Microsoft has just released MRTK3 to the public as a preview [18]. Both toolkits provide developers with a variety of interaction components tailored to their respective hardware ecosystems.

In the following sections, we will delve into the specific interaction features offered by each of these toolkits, highlighting their unique strengths and weaknesses to help developers make informed decisions when selecting the most appropriate toolkit for their needs.

Interactions

Controller Interactions:

Controller interactions are quite similar across the different toolkits. Controllers can be tracked by the VR headset in two different ways, and the choice can affect the performance or accuracy of interactions. The Lighthouse tracking system is one way to track VR controllers in the VR space and is used by the Valve Index and HTC Vive Pro headsets. It needs at least two base stations in the play area to act as reference points for the controllers and headset. The other approach is inside-out tracking, which is used by Oculus Quest 2 headsets [29]. In this system, LEDs are located in the ring on the top of the controller, and the cameras on the headset detect the LEDs by continuously taking images of them. The tracking system then triangulates the position of the controllers in space [29].
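To make the triangulation step more concrete, here is a minimal Python sketch. It is not the algorithm any headset actually ships with. It assumes two cameras with known positions each report a ray pointing towards the same LED, and it estimates the LED position as the midpoint of the shortest segment between the two rays. The function name and the camera layout are hypothetical.

```python
def triangulate_midpoint(origin_a, dir_a, origin_b, dir_b):
    """Estimate a 3D point from two camera rays (closest-point midpoint).

    Each ray is origin + t * direction. All names here are illustrative.
    """
    def sub(u, v):
        return tuple(p - q for p, q in zip(u, v))

    def dot(u, v):
        return sum(p * q for p, q in zip(u, v))

    def along(origin, direction, t):
        return tuple(o + t * d for o, d in zip(origin, direction))

    w = sub(origin_a, origin_b)
    a = dot(dir_a, dir_a)
    b = dot(dir_a, dir_b)
    c = dot(dir_b, dir_b)
    d = dot(dir_a, w)
    e = dot(dir_b, w)

    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; cannot triangulate")

    # Parameters of the closest points on ray A and ray B respectively.
    t = (b * e - c * d) / denom
    s = (a * e - b * d) / denom

    closest_on_a = along(origin_a, dir_a, t)
    closest_on_b = along(origin_b, dir_b, s)
    return tuple((p + q) / 2 for p, q in zip(closest_on_a, closest_on_b))
```

With noisy camera measurements the two rays rarely intersect exactly, which is why the sketch takes a midpoint rather than solving for a true intersection. A real tracker would typically combine many LEDs, more than two cameras, and inertial sensor data.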

The interactions in the VR environment are usually quite intuitive. While the buttons mapped to actions might vary between headsets and depending on the game or application, the choice is usually based on what is best for the user’s experience and for accomplishing the task at hand.

Hand Interactions:

Hand interactions have significantly improved in recent years, with XRI, Oculus, and VRTK all capable of facilitating a wide range of interactions using both users’ hands and controllers. The Oculus Integration package excels at hand tracking, providing more realistic finger movements than the other toolkits. Additionally, it features a user-friendly Hand Grab Pose recorder for creating realistic hand grab positions when interacting with objects. However, this recorder is only compatible with Android, and Mac-based Unity developers must manually create these poses, which can be time-consuming and produce less natural results [19].

VRTK lacks hand tracking capabilities and lags behind the other two toolkits in hand interactions. XRI plans to enhance two-handed interactions and articulated hand tracking by employing external cameras [20]. Currently, Oculus Integration offers superior hand tracking through its OVR Hand and OVR Controller components, but its performance is optimized for Oculus VR headsets.

One issue I have come across with interactions recently is tracking both controllers and hands at the same time. This may not be an issue in the future, as the Quest Pro HMD is capable of tracking both simultaneously. The issue is not related to the toolkit but appears to be a headset limitation. I want to use the controller to track a real object in a virtual space while keeping both hands free to interact with the object. I have tried a few methods to overcome this obstacle, such as using an NGIMU sensor to track the object, attaching the object to the camera rig, and using a Vive Tracker. The NGIMU sensor worked well for rotation, but an additional GPS sensor did not have the accuracy needed. The Vive Tracker seems like the best option; however, compatibility problems on a Mac and the need to use the SteamVR plugin make this option impractical at the moment. Hopefully these issues will ease as headsets improve and, as Apple enters the VR headset market, more software and hardware become compatible with Apple devices.

Locomotion

Locomotion is a fundamental aspect of every VR project and is a primary focus of all the toolkits. It is essential to understand and plan for locomotion in VR environments, as different locomotion types suit different projects. Teleportation, a popular locomotion type in VR, helps reduce motion sickness by allowing users to instantly teleport to a pointed location within the virtual environment. However, it can also break the user’s sense of immersion because of the visual “jumps”. Blink movement is another method that further minimises motion sickness by fading the screen to black during the instant transition.
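The blink idea can be sketched in a few lines of Python. This is an illustration only and does not reproduce any toolkit’s component: the `Rig` class, the function names, and the 0.2 second fade time are all assumptions. The overlay fades to black, the rig is moved only once the screen is fully dark, and the overlay then fades back in.

```python
from dataclasses import dataclass


@dataclass
class Rig:
    """Hypothetical player rig; position is (x, y, z) in metres."""
    position: tuple


def blink_alpha(elapsed, fade_time):
    """Opacity of a black overlay: rises 0 -> 1, then falls 1 -> 0."""
    if elapsed <= 0 or elapsed >= 2 * fade_time:
        return 0.0
    if elapsed <= fade_time:
        return elapsed / fade_time
    return (2 * fade_time - elapsed) / fade_time


def blink_teleport_step(rig, target, elapsed, fade_time=0.2):
    """Advance the blink by one frame and return the overlay opacity.

    The rig only jumps to the target once the fade-out has completed,
    so the user never sees the instant change of position.
    """
    if elapsed >= fade_time:
        rig.position = target
    return blink_alpha(elapsed, fade_time)
```

Calling `blink_teleport_step` once per frame with the time elapsed since the teleport was requested gives the opacity to draw for that frame. A plain teleport is the same logic with the fade time reduced to zero.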

Smooth locomotion, also known as direct movement, provides a more immersive experience by enabling users to navigate using a joystick or touchpad on their VR controllers, but it may induce motion sickness in some individuals. All three toolkits offer pre-built components for these locomotion types. Advanced locomotion techniques, such as arm-swinger, walking-in-place, room-scale, and vehicle-based locomotion, are not widely available but can be implemented with additional equipment or specific HMDs, such as the Oculus Quest 2 [21][27].
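As a rough illustration of smooth locomotion, the Python sketch below moves a rig across the ground plane according to a thumbstick vector, rotated so that “forward” follows the direction the headset is facing. The function name, the axis convention (x to the right, z forward, yaw measured from +z towards +x), and the parameters are assumptions for this example rather than any toolkit’s API.

```python
import math


def smooth_move(position, stick, head_yaw_deg, speed, dt):
    """Return the new (x, z) rig position after one frame of movement.

    position:     current (x, z) on the ground plane, in metres
    stick:        (x, y) thumbstick axes in [-1, 1]; y is forward
    head_yaw_deg: headset yaw; 0 faces +z, positive turns towards +x
    speed:        movement speed in metres per second
    dt:           frame time in seconds
    """
    yaw = math.radians(head_yaw_deg)
    stick_x, stick_y = stick

    # Rotate the stick vector by the head yaw so movement is head-relative.
    dx = stick_x * math.cos(yaw) + stick_y * math.sin(yaw)
    dz = -stick_x * math.sin(yaw) + stick_y * math.cos(yaw)

    x, z = position
    return (x + dx * speed * dt, z + dz * speed * dt)
```

Because the viewpoint moves continuously while the user’s body stays still, this style of movement is the one most often associated with motion sickness, which is why toolkits usually expose the speed as a tunable value.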

Gaze Interaction

Gaze interaction is not universally available on VR devices and is typically reserved for high-end or newer devices, such as HoloLens 2, Meta Quest Pro, and PlayStation VR2, due to the sensors required for tracking users’ gaze [14]. As a result, gaze interaction has only recently become a priority for toolkits. XRI has introduced a new gaze interactor called XR Gaze Interactor, driven by eye-gaze or head-gaze poses. In contrast, the Oculus Integration package supports eye tracking exclusively on the Meta Quest Pro using the OVREyeGaze script alongside the OVRPlugin and Movement SDK [22]. VRTK does not mention gaze interaction support and does not offer it as a featured component of the toolkit, although open-source components for the OpenXR package may exist.
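The idea of an interactor driven by either eye-gaze or head-gaze poses can be illustrated with a short Python sketch. It assumes, for the purposes of the example, that the head ray is used whenever no eye-tracking ray is available, and that a target counts as “looked at” when it lies within a small cone around the ray. The function names and the 5 degree threshold are hypothetical and are not taken from XRI or the Oculus Integration package.

```python
import math


def choose_gaze_ray(eye_ray, head_ray):
    """Prefer the eye-tracking ray; fall back to the head ray if missing."""
    return eye_ray if eye_ray is not None else head_ray


def is_gazed_at(ray, target, max_angle_deg=5.0):
    """Return True if target lies within max_angle_deg of the ray.

    ray is (origin, direction), both 3-tuples; target is a 3-tuple.
    """
    origin, direction = ray
    to_target = tuple(t - o for t, o in zip(target, origin))
    dist = math.sqrt(sum(c * c for c in to_target))
    norm = math.sqrt(sum(c * c for c in direction))
    if dist == 0 or norm == 0:
        return False

    cos_angle = sum(a * b for a, b in zip(to_target, direction)) / (dist * norm)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
    return math.degrees(math.acos(cos_angle)) <= max_angle_deg
```

On a headset without eye tracking, `choose_gaze_ray(None, head_ray)` simply returns the head ray, so the same hit test can be reused for both kinds of device.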

With the increasing availability of consumer HMDs capable of eye-tracking, research in this area has accelerated [23]. Researchers have developed custom gaze-based interaction toolkits to better understand this interaction method in VR/AR environments. For instance, a gaze interaction toolkit called EyeMRTK has been created, featuring six different sample methods [24]. The inclusion of gaze interactions as a standard interaction method, combined with components like interaction groups in the new version of the XRI toolkit, allows the development of projects with prioritized, non-conflicting interactors. This innovation not only enriches user interaction with VR/AR applications but also enhances accessibility.
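One way to picture prioritized, non-conflicting interactors is as an ordered list in which only the first interactor with a valid target is allowed to act. The Python sketch below shows that arbitration rule in isolation. It is a simplified illustration under that assumption, not the XRI interaction group implementation, and the function and interactor names are hypothetical.

```python
def pick_active_interactor(group):
    """Return the name of the highest-priority interactor with a target.

    group: list of (name, target) pairs ordered from highest to lowest
    priority; target is None when that interactor is not pointing at
    anything. Returns None when no interactor has a target.
    """
    for name, target in group:
        if target is not None:
            return name
    return None


# Example: the hand is not touching anything, so the ray wins over gaze
# even though both the ray and the gaze currently have targets.
group = [("direct_hand", None), ("ray", "lever"), ("gaze", "button")]
active = pick_active_interactor(group)
```

Under this rule a gaze interactor can stay enabled all the time without stealing selections from a hand or controller, since it only acts when every higher-priority interactor is idle.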

Pros and Cons

XRI Toolkit Pros:

- Developed by Unity Technologies, ensuring seamless integration with the Unity game engine.

- Supports cross-platform development for both VR and AR applications.

- Comprehensive set of interaction components, locomotion systems, and user interface elements.

- Regular updates with new features, such as eye gaze and hand tracking capabilities.

XRI Toolkit Cons:

- Hand tracking and two-handed interactions may not be as refined as those provided by the Oculus Integration Toolkit.

- May not fully leverage unique features and capabilities of specific VR hardware, such as Oculus headsets.

Oculus Integration Toolkit Pros:

- Developed by Oculus VR, specifically tailored for Oculus Rift and Quest headsets.

- Highly refined hand tracking capabilities, providing realistic finger movements.

- Offers unique features and capabilities, such as hand tracking and spatial audio.

- Regular updates with improvements and new features.

Oculus Integration Toolkit Cons:

- Limited to Oculus VR headsets.

- Hand Grab Pose recorder may not work well with non-Android development environments, requiring manual pose creation.

VRTK Pros:

- Open-source and platform-agnostic, supporting multiple VR devices.

- Wide range of pre-built interaction components available for easy integration.

- Licensed under the MIT License, promoting open development and collaboration.

VRTK Cons:

- Lacks native hand tracking capabilities.

- Older versions of the toolkit may have limited support and require manual code adjustments.

- May not leverage unique features and capabilities of specific VR hardware as well as the Oculus Integration Toolkit.

Discussion

In this review, we have analyzed and compared three popular VR interaction toolkits: Unity XR Interaction Toolkit (XRI), Oculus Integration Toolkit, and Virtual Reality Toolkit (VRTK). Each toolkit offers a unique set of features, strengths, and limitations, making them suitable for different use cases and developer preferences. Our comparison focused on three key aspects of interaction in VR: hand interaction, locomotion, and gaze interaction.

Regarding hand interaction, the Oculus Integration Toolkit emerged as the most accurate and advanced in terms of hand tracking and gesture recognition. However, its primary limitation is that it is optimized for Oculus VR headsets, which may not be ideal for developers targeting multiple platforms. The XRI toolkit offers solid hand interaction capabilities with plans for future improvements, while VRTK lags behind in this aspect. Tracking more than just the hands or just the controllers is a limitation that future toolkits will need to overcome, along with improvements in how HMDs track the space. Affordable ways to track a mix of hands and controllers, or both at once, would give developers the tools to create new and more interesting interactions within the VR space.

For locomotion, all three toolkits provide basic methods, such as teleportation and smooth locomotion. However, more specialized locomotion techniques like arm-swinger or vehicle-based locomotion are not widely supported and may require additional hardware or software components.

Gaze interaction is a relatively new area of focus for VR toolkits, as it is only supported by a limited number of high-end or recent VR devices. Both the XRI and Oculus Integration Toolkits have implemented gaze interaction capabilities, while VRTK currently does not provide built-in support for this feature. As gaze interaction becomes more prevalent, it is expected that these toolkits will continue to evolve and incorporate more advanced gaze-based interaction techniques.

Conclusion

In conclusion, selecting the appropriate VR interaction toolkit depends on several factors, including the target platform, desired interaction features, and the developer’s familiarity with the underlying game engine. The Unity XR Interaction Toolkit (XRI) offers a comprehensive and cross-platform solution, making it an excellent choice for developers seeking a versatile and easily accessible toolkit. The Oculus Integration Toolkit excels in hand tracking and gesture recognition, making it ideal for developers targeting Oculus VR devices. Lastly, the Virtual Reality Toolkit (VRTK) provides an open-source, platform-agnostic solution, though it may require additional customization and integration for advanced interaction features.

As the field of VR continues to grow and evolve, developers and researchers must carefully consider the available toolkits and frameworks to create engaging, interactive experiences that cater to diverse industries and use cases. By understanding the strengths and limitations of these toolkits, developers can make informed decisions when selecting the most appropriate toolkit for their specific needs, ultimately contributing to the refinement and advancement of VR experiences.

References:

[1] D. A. Bowman and R. P. McMahan, ‘Virtual Reality: How Much Immersion Is Enough?’, Computer, vol. 40, no. 7, pp. 36–43, Jul. 2007, doi: 10.1109/MC.2007.257.

[2] C. Dede, ‘Immersive Interfaces for Engagement and Learning’, Science (American Association for the Advancement of Science), vol. 323, no. 5910, pp. 66–69, 2009, doi: 10.1126/science.1167311.

[3] A. Rizzo and S. T. Koenig, ‘Is clinical virtual reality ready for primetime?’, Neuropsychology, vol. 31, no. 8, pp. 877–899, Nov. 2017, doi: 10.1037/neu0000405.

[4] A. Lele, ‘Virtual reality and its military utility’, J Ambient Intell Human Comput, vol. 4, no. 1, pp. 17–26, Feb. 2013, doi: 10.1007/s12652-011-0052-4.

[5] J. M. Davila Delgado, L. Oyedele, P. Demian, and T. Beach, ‘A research agenda for augmented and virtual reality in architecture, engineering and construction’, Advanced Engineering Informatics, vol. 45, p. 101122, Aug. 2020, doi: 10.1016/j.aei.2020.101122.

[6] A. Grabowski and K. Jach, ‘The use of virtual reality in the training of professionals: with the example of firefighters’, Computer Animation and Virtual Worlds, vol. 32, no. 2, p. e1981, 2021, doi: 10.1002/cav.1981.

[7] T. G. Zimmerman, J. Lanier, C. Blanchard, S. Bryson, and Y. Harvill, ‘A hand gesture interface device’, SIGCHI Bull., vol. 18, no. 4, pp. 189–192, May 1986, doi: 10.1145/1165387.275628.

[8] ‘Razer Hydra’, Wikipedia. Feb. 13, 2023. Accessed: Apr. 05, 2023. [Online]. Available: https://en.wikipedia.org/w/index.php?title=Razer_Hydra&oldid=1139131969

[9] ‘Novint Technologies’, Wikipedia. Aug. 21, 2022. Accessed: Apr. 05, 2023. [Online]. Available: https://en.wikipedia.org/w/index.php?title=Novint_Technologies&oldid=1105664091#Novint_Falcon

[10] R. P. McMahan, D. Gorton, J. Gresock, W. McConnell, and D. A. Bowman, ‘Separating the effects of level of immersion and 3D interaction techniques’, in Proceedings of the ACM symposium on Virtual reality software and technology, in VRST ’06. New York, NY, USA: Association for Computing Machinery, Nov. 2006, pp. 108–111. doi: 10.1145/1180495.1180518.

[11] R. P. McMahan, ‘Exploring the Effects of Higher-Fidelity Display and Interaction for Virtual Reality Games’, Dec. 2011, Accessed: Apr. 05, 2023. [Online]. Available: https://vtechworks.lib.vt.edu/handle/10919/30123

[12] J. J. LaViola, D. A. Feliz, D. F. Keefe, and R. C. Zeleznik, ‘Hands-free multi-scale navigation in virtual environments’, in Proceedings of the 2001 symposium on Interactive 3D graphics, in I3D ’01. New York, NY, USA: Association for Computing Machinery, Mar. 2001, pp. 9–15. doi: 10.1145/364338.364339.

[13] M. R. Mine, F. P. Brooks, and C. H. Sequin, ‘Moving objects in space: exploiting proprioception in virtual-environment interaction’, in Proceedings of the 24th annual conference on Computer graphics and interactive techniques, in SIGGRAPH ’97. USA: ACM Press/Addison-Wesley Publishing Co., Aug. 1997, pp. 19–26. doi: 10.1145/258734.258747.

[14] ‘Eyes, hands, simulation, and samples: What’s new in Unity XR Interaction Toolkit 2.3 | Unity Blog’. https://blog.unity.com/engine-platform/whats-new-in-xr-interaction-toolkit-2-3 (accessed Apr. 07, 2023).

[15] ‘Downloads – Oculus Integration SDK’. https://developer.oculus.com/downloads/package/unity-integration/ (accessed Apr. 07, 2023).

[16] ‘VRTK – Virtual Reality Toolkit – [ VR Toolkit ] | Integration | Unity Asset Store’. https://assetstore.unity.com/packages/tools/integration/vrtk-virtual-reality-toolkit-vr-toolkit-64131 (accessed Apr. 07, 2023).

[17] ‘SteamVR Unity Plugin | SteamVR Unity Plugin’. https://valvesoftware.github.io/steamvr_unity_plugin/index.html (accessed Apr. 07, 2023).

[18] ‘What is the Mixed Reality Toolkit’. Microsoft, Apr. 07, 2023. Accessed: Apr. 07, 2023. [Online]. Available: https://github.com/microsoft/MixedRealityToolkit-Unity

[19] ‘Create a Hand Grab Pose (Android) | Oculus Developers’. https://developer.oculus.com/documentation/unity/unity-isdk-creating-handgrab-poses/ (accessed Apr. 07, 2023).

[20] ‘XR Interaction Toolkit – Unity Platform – AR/VR | Product Roadmap’. https://portal.productboard.com/brs5gbymuktquzeomnargn2u/tabs/8-xr-interaction-toolkit (accessed Apr. 07, 2023).

[21] P. T. Wilson, W. Kalescky, A. MacLaughlin, and B. Williams, ‘VR locomotion: walking > walking in place > arm swinging’, in Proceedings of the 15th ACM SIGGRAPH Conference on Virtual-Reality Continuum and Its Applications in Industry – Volume 1, Zhuhai China: ACM, Dec. 2016, pp. 243–249. doi: 10.1145/3013971.3014010.

[22] ‘Eye Tracking for Movement SDK for Unity: Unity | Oculus Developers’. https://developer.oculus.com/documentation/unity/move-eye-tracking/ (accessed Apr. 07, 2023).

[23] I. B. Adhanom, P. MacNeilage, and E. Folmer, ‘Eye Tracking in Virtual Reality: a Broad Review of Applications and Challenges’, Virtual Reality, Jan. 2023, doi: 10.1007/s10055-022-00738-z.

[24] D. Mardanbegi and T. Pfeiffer, ‘EyeMRTK: a toolkit for developing eye gaze interactive applications in virtual and augmented reality’, in Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications, Denver Colorado: ACM, Jun. 2019, pp. 1–5. doi: 10.1145/3317956.3318155.

[25] ‘XR Interaction Toolkit Examples – Version 2.3.0’. Unity Technologies, Apr. 06, 2023. Accessed: Apr. 07, 2023. [Online]. Available: https://github.com/Unity-Technologies/XR-Interaction-Toolkit-Examples

[26] ‘XR Interaction Toolkit | XR Interaction Toolkit | 2.3.0’. https://docs.unity3d.com/Packages/com.unity.xr.interaction.toolkit@2.3/manual/index.html (accessed Apr. 07, 2023).

[27] E. Bozgeyikli, A. Raij, S. Katkoori, and R. Dubey, ‘Locomotion in virtual reality for room scale tracked areas’, International Journal of Human-Computer Studies, vol. 122, pp. 38–49, Feb. 2019, doi: 10.1016/j.ijhcs.2018.08.002.

[28] ‘What’s new in version 2.4.0 | XR Interaction Toolkit | 2.4.3’. https://docs.unity3d.com/Packages/com.unity.xr.interaction.toolkit@2.4/manual/whats-new-2.4.0.html (accessed Aug. 24, 2023).

[29] ‘Overview of VR Controllers: The Way of Interacting With the Virtual Worlds’. https://circuitstream.com/blog/vr-controllers-the-way-of-interacting-with-the-virtual-worlds (accessed Aug. 25, 2023).
